When you set up multiple application servers for redundancy, you need a way to efficiently distribute incoming service requests or network traffic across that group of backend servers. The mechanism used for this is called load balancing.

Load balancing offers several advantages, including:

- Increased application availability through redundancy

- Increased reliability

- Increased scalability

- Improved application performance

While you work through this article, you may also find our Ultimate Guide to Secure, Harden and Improve Performance of Nginx Web Server helpful for getting the most out of your setup.

To distribute incoming network traffic and workload among a group of application servers, you can use Nginx as an efficient HTTP load balancer. In each case, the selected server returns a response to Nginx, which passes it back to the appropriate client.

Nginx supports the following load balancing methods:

- round-robin: requests are distributed to the application servers in round-robin fashion. This is the default method when no method is specified.

- least-connected: the next request is assigned to the least busy server, that is, the one with the fewest active connections.

- ip-hash: a hash function based on the client's IP address determines which server should be selected for the next request. This method provides session persistence, because requests from the same client keep going to the same server as long as it is available.
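Two of these selection rules can be modeled in a few lines of bash. The sketch below is a toy model, not Nginx's code: the connection counts and client addresses are made-up inputs, ties go to the first server (Nginx itself breaks least_conn ties with weighted round-robin and also factors in server weights), and `cksum` stands in for Nginx's own hash function. The one detail taken from Nginx is that ip_hash keys IPv4 clients on the first three octets of the address.

```bash
#!/usr/bin/env bash
# Toy models of two Nginx selection rules (not Nginx's actual code).
# The server list matches this article's test setup.
SERVERS=(192.168.58.5 192.168.58.8)

# least-connected: pass one active-connection count per server, in the same
# order as SERVERS; prints the server with the fewest. Ties go to the first
# server here (Nginx uses weighted round-robin among tied servers).
least_conn_pick() {
  local counts=("$@") best=0 i
  for ((i = 1; i < ${#counts[@]}; i++)); do
    if (( counts[i] < counts[best] )); then
      best=$i
    fi
  done
  echo "${SERVERS[best]}"
}

# ip-hash: like Nginx, use only the first three octets of an IPv4 address,
# so all clients in the same /24 map to the same server. cksum is a stand-in
# for Nginx's internal hash function.
ip_hash_pick() {
  local ip="$1" key sum n=${#SERVERS[@]}
  key="${ip%.*}"                       # 203.0.113.25 -> 203.0.113
  sum=$(printf '%s' "$key" | cksum)    # cksum prints "<checksum> <bytes>"
  sum="${sum%% *}"                     # keep only the checksum
  echo "${SERVERS[sum % n]}"
}
```

For example, `least_conn_pick 3 1` prints 192.168.58.8, and any two clients in 203.0.113.0/24 get the same answer from `ip_hash_pick`.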

At a more advanced level, you can influence the Nginx load balancing algorithms with server weights. Nginx also performs passive (in-band) health checks: if the response from a particular server fails with an error, Nginx marks that server as failed and avoids selecting it for subsequent requests for a while.
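The passive health check described above can be sketched as follows. This is a simplified model rather than Nginx's implementation: MAX_FAILS and FAIL_TIMEOUT mirror the max_fails and fail_timeout parameters of the upstream server directive (Nginx defaults: 1 and 10 seconds), the clock is passed in explicitly in seconds, and failures are counted consecutively rather than within a fail_timeout window. The function names are hypothetical, and the script needs bash 4 or later for associative arrays.

```bash
#!/usr/bin/env bash
# Simplified model of Nginx passive (in-band) health checks; requires bash 4+.
MAX_FAILS=1        # mirrors max_fails (Nginx default: 1)
FAIL_TIMEOUT=10    # mirrors fail_timeout in seconds (Nginx default: 10s)
declare -A FAILS=()       # consecutive failures per server
declare -A DOWN_UNTIL=()  # time before which a server is skipped

# record_result SERVER NOW ok|fail
record_result() {
  local server="$1" now="$2" result="$3" n
  if [[ $result == ok ]]; then
    FAILS[$server]=0
    return 0
  fi
  n=$(( ${FAILS[$server]:-0} + 1 ))
  if (( n >= MAX_FAILS )); then
    # Mark the server as failed for FAIL_TIMEOUT seconds, then give it another chance
    DOWN_UNTIL[$server]=$(( now + FAIL_TIMEOUT ))
    n=0
  fi
  FAILS[$server]=$n
}

# is_available SERVER NOW: exit status 0 if the server may be selected
is_available() {
  local server="$1" now="$2" down_until
  down_until=${DOWN_UNTIL[$server]:-0}
  (( now >= down_until ))
}
```

After `record_result 192.168.58.5 100 fail`, `is_available 192.168.58.5 105` returns a non-zero status, while `is_available 192.168.58.5 110` succeeds.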

This guide shows you how to use Nginx as an HTTP load balancer to distribute incoming client requests between two servers.

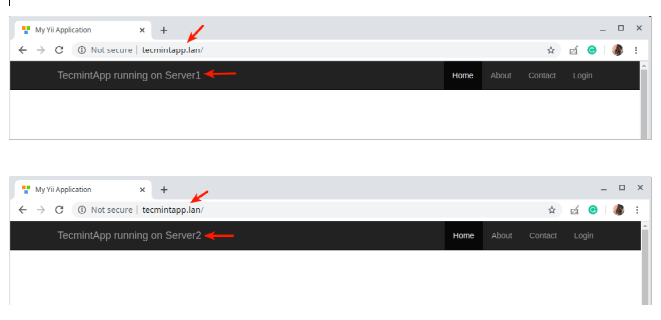

For testing purposes, each application instance is labeled to indicate the server it runs on.

Testing Environment Setup

- Load balancer: 192.168.58.7

- Application server 1: 192.168.58.5

- Application server 2: 192.168.58.8

Each application instance on the application servers is configured to be accessible using the domain tecmintapp.lan. Assuming this is a fully registered domain, you would add the following DNS setting:

A Record @ 192.168.58.7

This A record tells clients where the domain should direct their requests, which in this scenario is the load balancer at 192.168.58.7. Note that A records accept only IPv4 addresses. Alternatively, for testing purposes, you can add the following entry to the /etc/hosts file on your client machines:

```
192.168.58.7 tecmintapp.lan
```
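If you script client setup, a small helper can keep the entry from being added twice. This is a sketch: the function name is hypothetical, and it only detects an exact `IP name` line (an existing entry written with tabs or extra aliases is not recognized). Reading /etc/hosts needs no root, so the privileged write is left to `sudo tee`.

```bash
#!/usr/bin/env bash
# Print "IP NAME" only if the hosts file does not already contain that exact line.
# Reading /etc/hosts needs no root; pipe the output to "sudo tee -a" to append it.
hosts_line_if_missing() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # -q quiet, -x match the whole line, -F fixed string (dots are literal)
  if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name"
  fi
}
```

Usage: `hosts_line_if_missing 192.168.58.7 tecmintapp.lan | sudo tee -a /etc/hosts`. Afterwards, `getent hosts tecmintapp.lan` should report 192.168.58.7.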

Setting up Nginx Load Balancing in Linux

Before you begin, install Nginx on the load balancer server using your distribution's default package manager, as shown below.

```
$ sudo apt install nginx     [On Debian/Ubuntu]
$ sudo yum install nginx     [On CentOS/RHEL]
```
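To pick the right command automatically, a script can read the distribution ID from /etc/os-release. The sketch below (the function name is hypothetical) only recognizes the two families listed above, and it prints the command instead of running it so you can review it first.

```bash
#!/usr/bin/env bash
# Print the Nginx install command for the distribution described by an
# os-release file (default: /etc/os-release). Covers only the two families above.
nginx_install_cmd() {
  local release_file="${1:-/etc/os-release}" id id_like
  # Source the file in a subshell so its variables don't leak into this shell;
  # the first word becomes id, everything after it becomes id_like.
  read -r id id_like < <(. "$release_file" && echo "${ID:-unknown} ${ID_LIKE:-}")
  case " $id $id_like " in
    *" debian "* | *" ubuntu "*) echo "sudo apt install nginx" ;;
    *" rhel "* | *" centos "*)   echo "sudo yum install nginx" ;;
    *) echo "unsupported distribution: $id" >&2; return 1 ;;
  esac
}
```

On Ubuntu, for example, `nginx_install_cmd` prints `sudo apt install nginx`.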

Next, create a server block file called /etc/nginx/conf.d/loadbalancer.conf. You can choose any name you like, as long as it ends in .conf, because the default nginx.conf only includes files matching /etc/nginx/conf.d/*.conf.

```
$ sudo vi /etc/nginx/conf.d/loadbalancer.conf
```

Then copy and paste the following configuration into the file. Because it does not define a load balancing method, Nginx uses round-robin by default.

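```nginx
# Pool of application servers that requests are distributed across
upstream backend {
    server 192.168.58.5;
    server 192.168.58.8;
}

server {
    # If another server block already uses default_server on port 80 (e.g. the
    # stock default site on Debian/Ubuntu), disable it or drop default_server
    # here, otherwise "nginx -t" reports a duplicate default server.
    listen 80 default_server;
    listen [::]:80 default_server;
    server_name tecmintapp.lan;

    location / {
        proxy_redirect off;
        # Pass the client's address and the original Host header to the backends
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header Host $http_host;
        # Forward the request to the "backend" upstream group defined above
        proxy_pass http://backend;
    }
}
```

Here, the proxy_pass directive, placed inside the location / block, passes each request to the group of servers defined in the upstream directive, which it references by the name backend. Nginx then distributes the requests among those servers using the weighted round-robin method; because no weights are set, every server has the default weight of 1 and receives an equal share.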
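With all servers at the default weight, the distribution reduces to plain round-robin: request number N goes to server N modulo the number of servers. A minimal sketch, where the request counter is a hypothetical stand-in for Nginx's internal per-group state:

```bash
#!/usr/bin/env bash
# Plain round-robin over the upstream servers from the configuration above.
UPSTREAM=(192.168.58.5 192.168.58.8)

# rr_pick N: server that handles request number N (counting from 0)
rr_pick() {
  local n="$1" size=${#UPSTREAM[@]}
  echo "${UPSTREAM[n % size]}"
}

# Show which server handles each of the first four requests
for req in 0 1 2 3; do
  echo "request $req -> $(rr_pick "$req")"
done
```

Requests 0 and 2 go to 192.168.58.5, and requests 1 and 3 go to 192.168.58.8. In practice, each Nginx worker process keeps its own balancing state unless the upstream group uses a shared memory zone, so the strict alternation shown here is an idealization.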

To use the least-connected method instead, add the least_conn directive to the upstream block:

```nginx
upstream backend {
    # Send each request to the server with the fewest active connections
    least_conn;
    server 192.168.58.5;
    server 192.168.58.8;
}
```

To enable session persistence with ip_hash, use the following configuration instead:

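```nginx
upstream backend {
    # Pick the server from a hash of the client's IP address, so a given
    # client keeps reaching the same server while it is available
    ip_hash;
    server 192.168.58.5;
    server 192.168.58.8;
}
```

You can also influence load balancing decisions with server weights. For example, if six requests arrive from clients, you can have them assigned as follows: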

- Four of the six requests are assigned to 192.168.58.5.

- Two of the six requests are assigned to 192.168.58.8.
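The four-to-two split above follows from how weighted round-robin spreads requests. The sketch below implements smooth weighted round-robin, the scheme Nginx's round-robin balancer is based on: on every request each server's running score grows by its weight, the highest score wins, and the winner's score drops by the total weight. It is a simplified model (no max_fails, backup servers, or per-worker state), and the `swrr_sequence` name and its `SERVER=WEIGHT` arguments are made up for illustration. A weight of 2 against the default of 1 gives the heavier server four of every six requests, whereas a weight of 4 would give it four of every five.

```bash
#!/usr/bin/env bash
# Smooth weighted round-robin (simplified model, not Nginx's source code).
# Usage: swrr_sequence COUNT SERVER=WEIGHT...
swrr_sequence() {
  local count="$1"; shift
  local -a names=() weights=() current=()
  local pair total=0 i r best
  for pair in "$@"; do
    names+=("${pair%%=*}")       # text before "=" is the server
    weights+=("${pair##*=}")     # text after "=" is its weight
    current+=(0)
    total=$(( total + ${pair##*=} ))
  done
  for ((r = 0; r < count; r++)); do
    best=0
    for ((i = 0; i < ${#names[@]}; i++)); do
      # every server's running score grows by its weight...
      current[i]=$(( current[i] + weights[i] ))
      # ...and the highest score wins (the first server wins ties)
      if (( current[i] > current[best] )); then best=$i; fi
    done
    # the winner gives back the total weight, so heavier servers win more often
    current[best]=$(( current[best] - total ))
    echo "${names[best]}"
  done
}
```

`swrr_sequence 6 192.168.58.5=2 192.168.58.8=1` prints 192.168.58.5, 192.168.58.8, 192.168.58.5, 192.168.58.5, 192.168.58.8, 192.168.58.5, one per line. Interleaving the picks, instead of sending four requests in a row to the heavier server, is what makes the scheme "smooth".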

To assign requests in that four-to-two ratio, use the following configuration:

```nginx
upstream backend {
    # weight=2 against the default weight of 1: out of every six requests,
    # four go to 192.168.58.5 and two go to 192.168.58.8
    server 192.168.58.5 weight=2;
    server 192.168.58.8;
}
```

When you are done, save the file and exit. Then make sure the Nginx configuration syntax is still correct by running:

```
$ sudo nginx -t
```

To apply the changes, restart the Nginx service and enable it to start automatically at boot:

```
$ sudo systemctl restart nginx
$ sudo systemctl enable nginx
```

Testing Nginx Load Balancing in Linux

To test Nginx load balancing, open the website by navigating to the following URL:

http://tecmintapp.lan

When the site loads, note which application instance served it. Keep refreshing the page; at some point you should see the app being loaded from the second server, which indicates that load balancing is working.
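Instead of refreshing the browser, you can send a batch of requests from the command line and count which instance answered. This is a sketch that assumes curl is installed and that each instance's page contains a label matching a pattern you supply; the function name and the pattern in the usage line are hypothetical, since the label format depends on your application.

```bash
#!/usr/bin/env bash
# Send COUNT requests to URL and tally which backend label came back.
# LABEL_RE must match however your application instances label themselves.
count_backends() {
  local url="$1" count="$2" label_re="$3" i
  for ((i = 0; i < count; i++)); do
    # -s hides curl's progress output; keep only the first label in each body
    curl -s "$url" | grep -oE "$label_re" | head -n 1
  done | sort | uniq -c
}
```

For example, `count_backends http://tecmintapp.lan 10 'app-server-[0-9]'` should report roughly equal counts for both instances with the default round-robin method; other traffic reaching the load balancer at the same time can skew the numbers.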

You now know how to set up Nginx as an HTTP load balancer in Linux. We would love to hear your thoughts on this article and about your experience using Nginx as a load balancer. For more information, see the Nginx documentation on Using Nginx as an HTTP load balancer.
